Step 3 in building online applications: Make it easy and efficient for evaluators

In our previous entries, we examined the importance of making online applications easy to use through a smart and straightforward layout, and how to best present questions to guide applicants through the process. The third and final step puts the focus on the evaluator — the individual or committee that is reviewing the applications.

Once all the applications have been collected, analyzing the information is the next big task. Here’s a look at how to navigate it.

Stepping into analysis: Using quantitative questions during the application process is key to making the information easier to examine. The formatted results can break down a variety of applicant information. For example, a scholarship organization can collect an applicant’s gender, grade-point average, SAT/ACT scores and parental income range as numeric and multiple-choice questions so that they can easily be compared with those of other applicants. It’s also important to hide applicants’ sensitive information that isn’t needed for evaluation, such as home addresses and phone numbers.
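As a rough illustration of hiding sensitive details, the sketch below strips fields from an application record before it reaches an evaluator. The field names (`home_address`, `phone_number`, and the others) and the `evaluator_view` helper are hypothetical, chosen only for this example; a real application form would define its own.

```python
# Hypothetical field names; a real form would define its own.
SENSITIVE_FIELDS = {"home_address", "phone_number"}


def evaluator_view(application: dict) -> dict:
    """Return a copy of the application without the sensitive fields."""
    return {
        field: value
        for field, value in application.items()
        if field not in SENSITIVE_FIELDS
    }


application = {
    "name": "Sample Applicant",
    "gpa": 3.6,
    "home_address": "123 Example St.",
    "phone_number": "555-0100",
}

visible = evaluator_view(application)
print(sorted(visible))
```

The original record is left untouched, so the full details remain available to administrators who need them.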

Formatting for evaluators: Just as the process should be easy for applicants, so it should be for evaluators. Take the scholarship example: Many of these kinds of applications will require that students write and submit an essay. This strays from the simplicity of multiple choice questions, but it’s a necessary part of such an application.

Those who are examining each application should be able to rate it on a Likert scale. This allows evaluators to give a qualitative assessment of an essay, which can then be converted to quantitative data to make for easier analysis. As defined by BusinessDictionary.com: “… Likert scales usually have five potential choices (strongly agree, agree, neutral, disagree, strongly disagree) but sometimes go up to ten or more. The final average score represents the overall level of accomplishment or attitude toward the subject matter.”

Points can be used to reflect the Likert scale ratings (as in five to nine points for “adequate,” 10 to 13 points for “good,” 14 to 16 points for “excellent”) so that a subjective element can turn into a number rating, which can then fall in line with the applicant’s grades and test scores. The evaluators should also be able to add comments in a special field for any notes on the essay that can’t be explained by a number rating.
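The point ranges above can be sketched as a simple lookup. This is a minimal example, assuming the three bands given in the text are the only ones; the names `RATING_BANDS`, `label_for_points` and `EssayReview` are made up for illustration, and point totals outside the listed ranges are left unlabeled rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional

# Bands from the example in the text: (lowest points, highest points, label).
RATING_BANDS = [
    (5, 9, "adequate"),
    (10, 13, "good"),
    (14, 16, "excellent"),
]


def label_for_points(points: int) -> Optional[str]:
    """Return the rating label for a point total, or None if no band matches."""
    for low, high, label in RATING_BANDS:
        if low <= points <= high:
            return label
    return None


@dataclass
class EssayReview:
    """One evaluator's essay rating plus a free-text comment (hypothetical shape)."""
    points: int
    comment: str = ""

    @property
    def label(self) -> Optional[str]:
        return label_for_points(self.points)


review = EssayReview(points=12, comment="Clear argument, a few grammar slips.")
print(review.label)
```

Keeping the comment next to the number means the note travels with the score when results are compared.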

Autoscoring: Other data can be converted into points through autoscoring. Grade-point averages are a good example. Because GPAs are decimal values that can vary widely, autoscoring assigns each range a point value. A student with a GPA of 3.5 or higher, for instance, can be assigned five points. A GPA from 3.25 up to 3.5 gets four points, a GPA from 3.0 up to 3.25 gets three points, and so on. Those points can then be easily analyzed along with the other data.
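Here is a minimal sketch of that GPA rule. It assumes each band includes its lower bound (so a 3.25 earns four points), which the text does not spell out, and it only covers the three bands named above; the names `GPA_BANDS` and `autoscore_gpa` are hypothetical. Lower bands would follow the same pattern once their cutoffs are decided.

```python
from typing import Optional

# (minimum GPA, points), highest band first. Only the bands named in the text.
GPA_BANDS = [
    (3.5, 5),
    (3.25, 4),
    (3.0, 3),
]


def autoscore_gpa(gpa: float) -> Optional[int]:
    """Return the points for a GPA, or None if it falls below the listed bands."""
    for minimum, points in GPA_BANDS:
        if gpa >= minimum:  # assumption: the lower bound belongs to the band
            return points
    return None  # cutoffs below 3.0 are not specified in the text


for gpa in (3.8, 3.4, 3.1):
    print(gpa, autoscore_gpa(gpa))
```

Listing the bands from highest to lowest lets the first match win, so each GPA lands in exactly one band.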

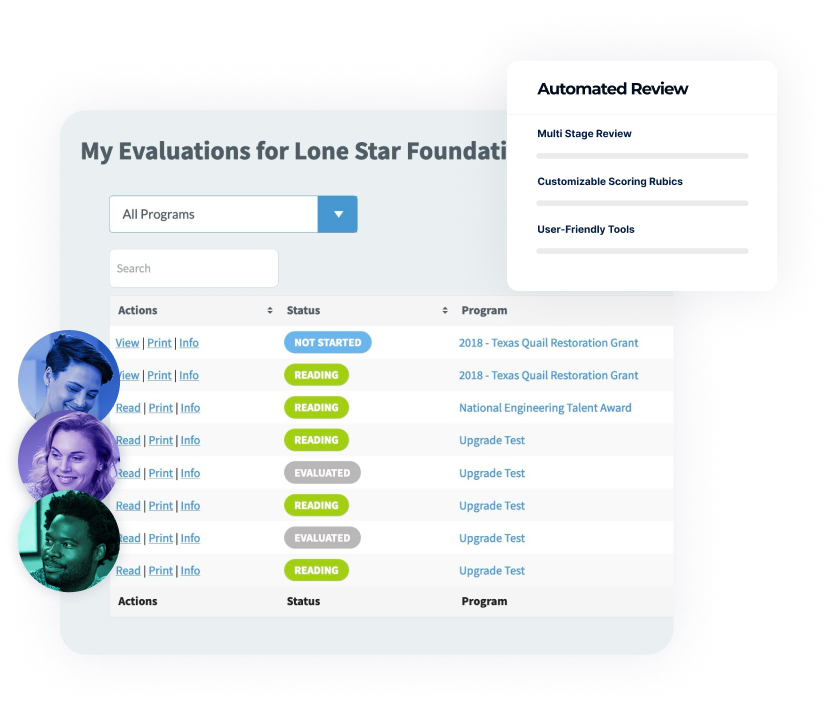

Custom columns for evaluators: Another way to make things easier for evaluators is to format the results of applications already reviewed. In the scholarship example, this could mean seeing the student name and school listed along with ratings on the essay, recommendations submitted on behalf of the applicant and an overall rating. This also allows noting which applications are still pending, so that they don’t slip through the cracks in the evaluation process.

Share evaluator answers: Because multiple people may be weighing in on each application, it can be helpful to see some of those other ratings. The format can include each evaluator’s general feedback, descriptions of strengths and weaknesses and comments about the essay, along with the overall ratings of the applicant, essay and recommendations.

Send reminders to evaluators: Efficiency is naturally an important element of the application process. Evaluators may need reminders about those pending applications, especially when a deadline is established and approaching. These reminders can come in the form of a simple, formatted email or text message that notes the name of the application, re-emphasizes the deadline and provides an easy link back to the evaluations.
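A reminder like this can be assembled from three pieces of information. The sketch below only builds the message text; actually sending it by email or text message is left out. The function name `build_reminder`, the sample application name and the example.com link are all placeholders, not part of any real system.

```python
from datetime import date


def build_reminder(application_name: str, deadline: date, link: str) -> str:
    """Build a short reminder that names the application, deadline and link."""
    return (
        f"Reminder: you have pending evaluations for {application_name}.\n"
        f"Deadline: {deadline.isoformat()}\n"
        f"Return to your evaluations: {link}"
    )


message = build_reminder(
    "Sample Scholarship",                # placeholder application name
    date(2030, 1, 15),                   # placeholder deadline
    "https://example.com/evaluations",   # placeholder link
)
print(message)
```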

This brings our three-part series to a close. As always, stay tuned to our website for more news, notes and helpful hints from the SmarterSelect team.

FAQs

1. How can I make it easy and efficient for evaluators to review submissions of online applications?

Once all the applications have been collected, analyzing the information is the next big task. Using quantitative questions during the application process is key to making the information easier to examine.

2. How can I go about sending reminders to evaluators?

Evaluators may need reminders about those pending applications, especially when a deadline is established and approaching. These reminders can come in the form of a simple, formatted email or text message that notes the name of the application, re-emphasizes the deadline and provides an easy link back to the evaluations.